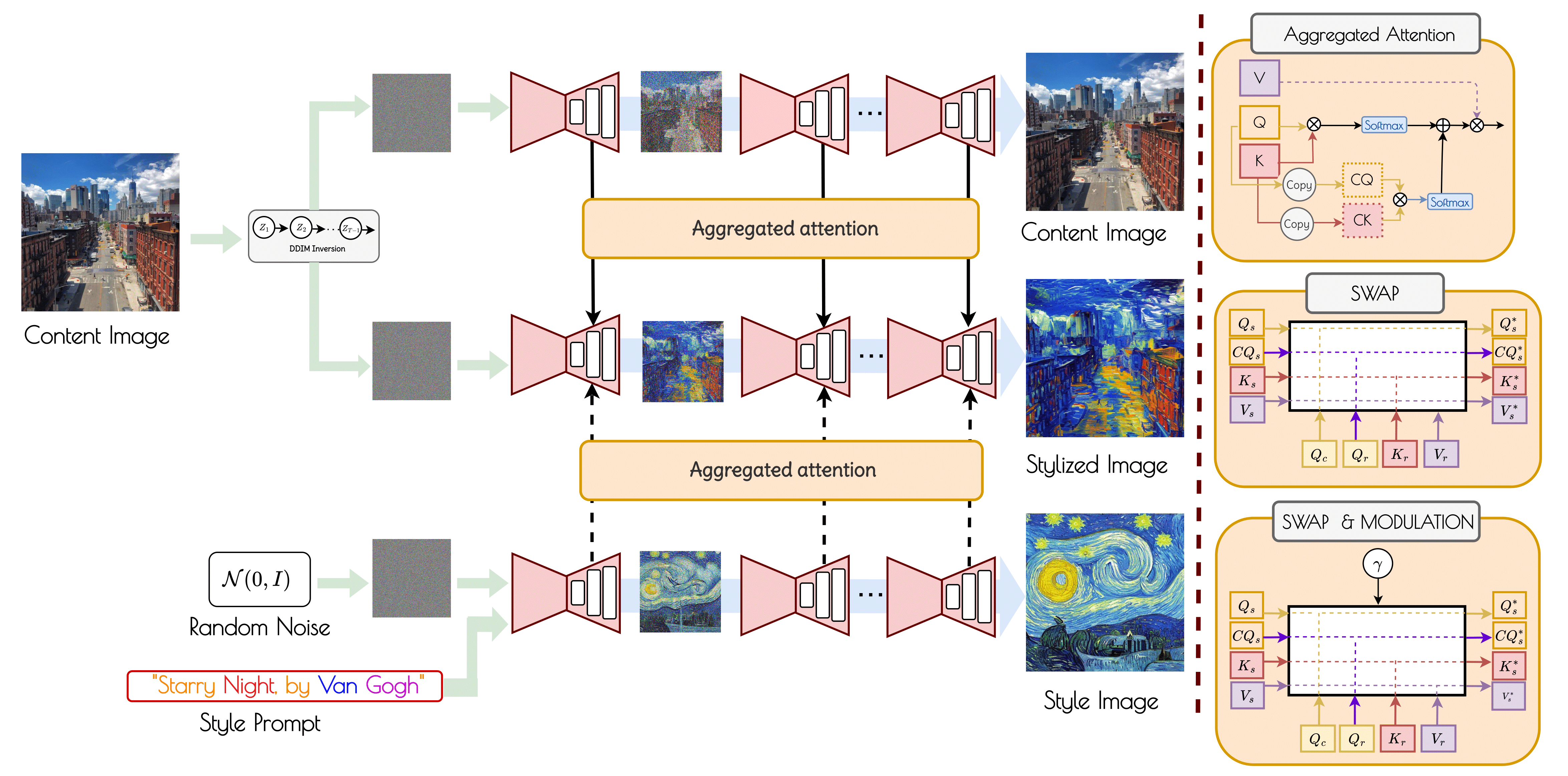

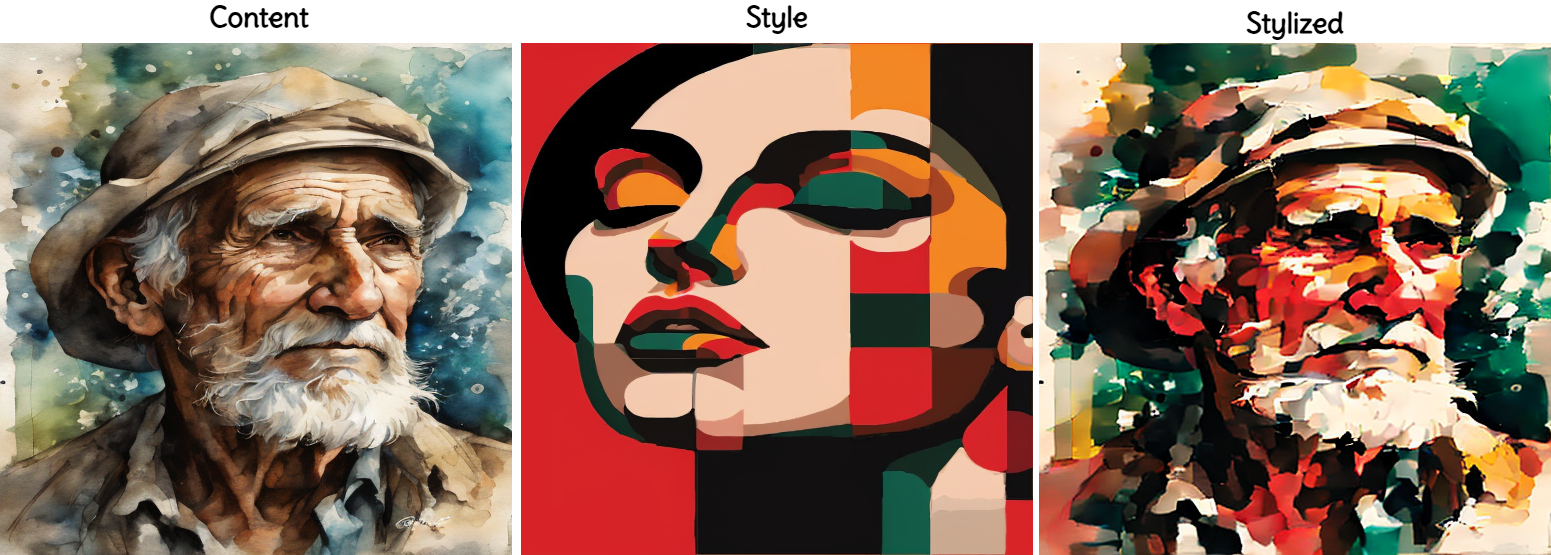

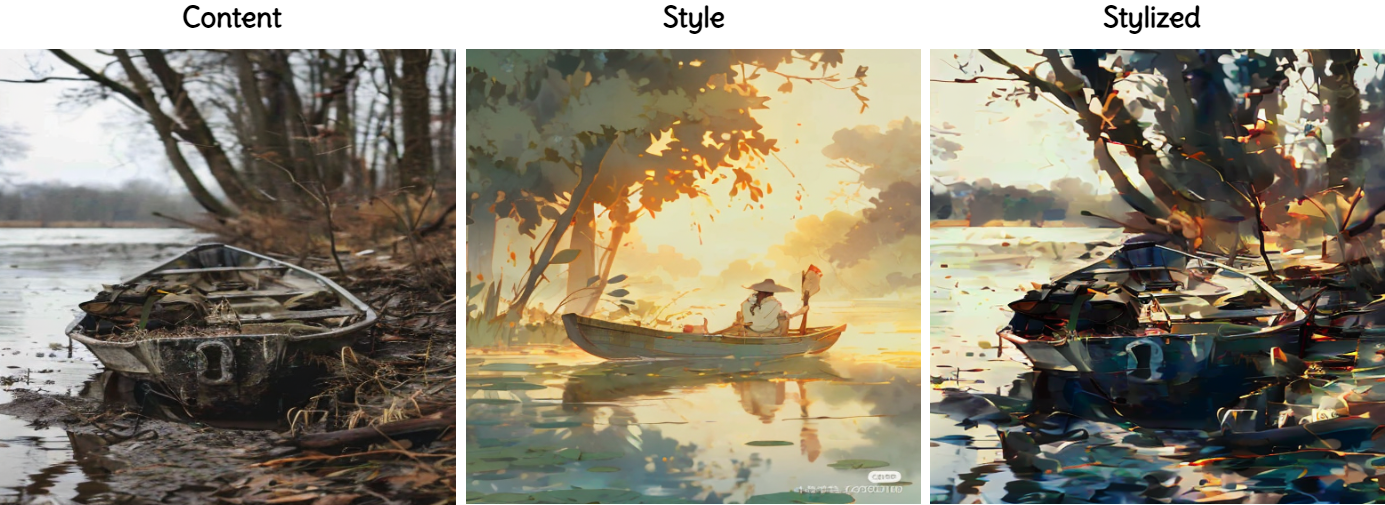

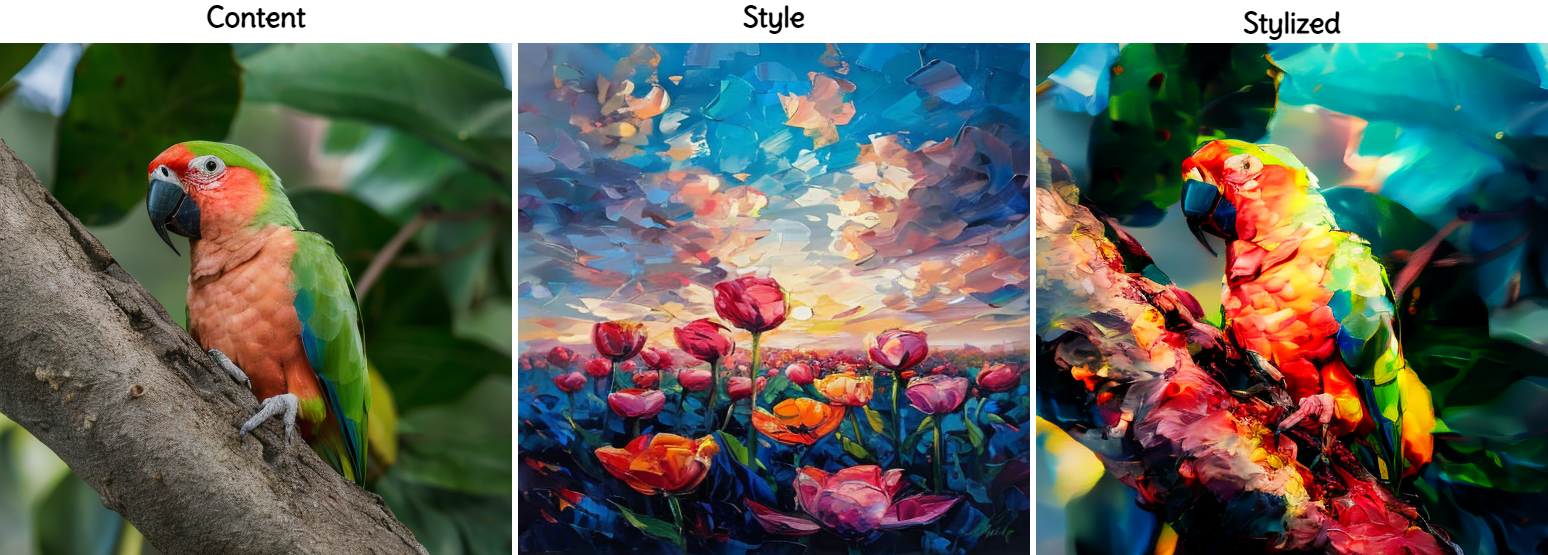

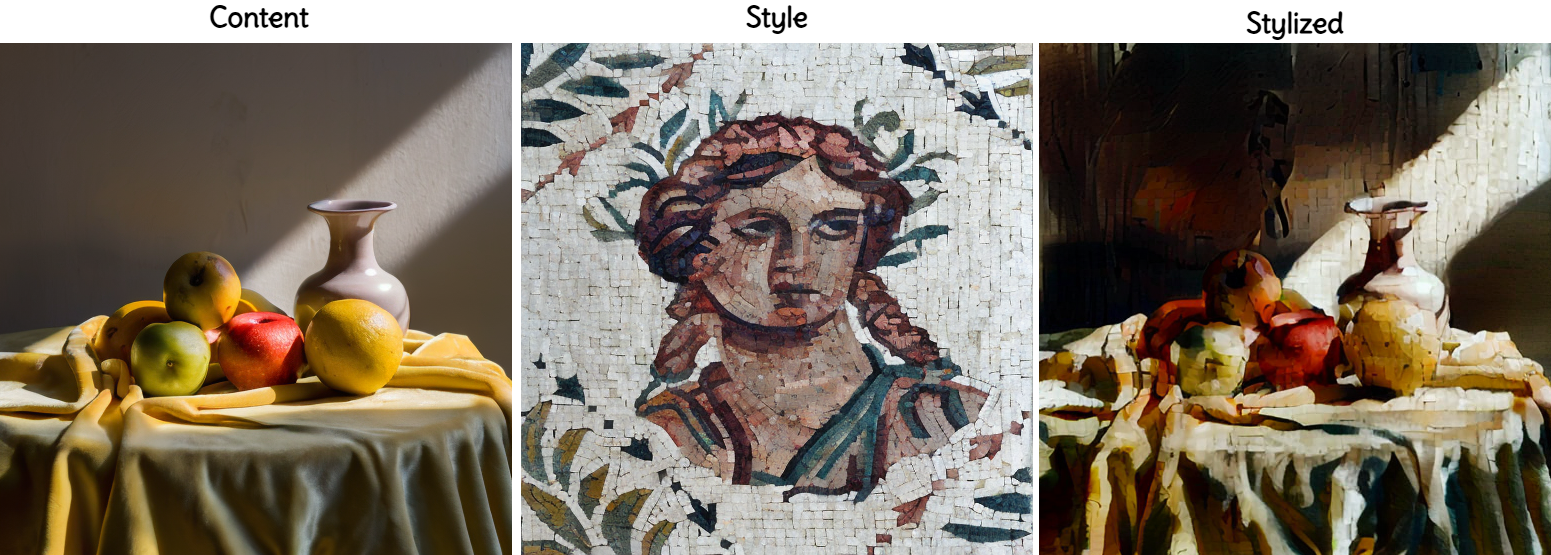

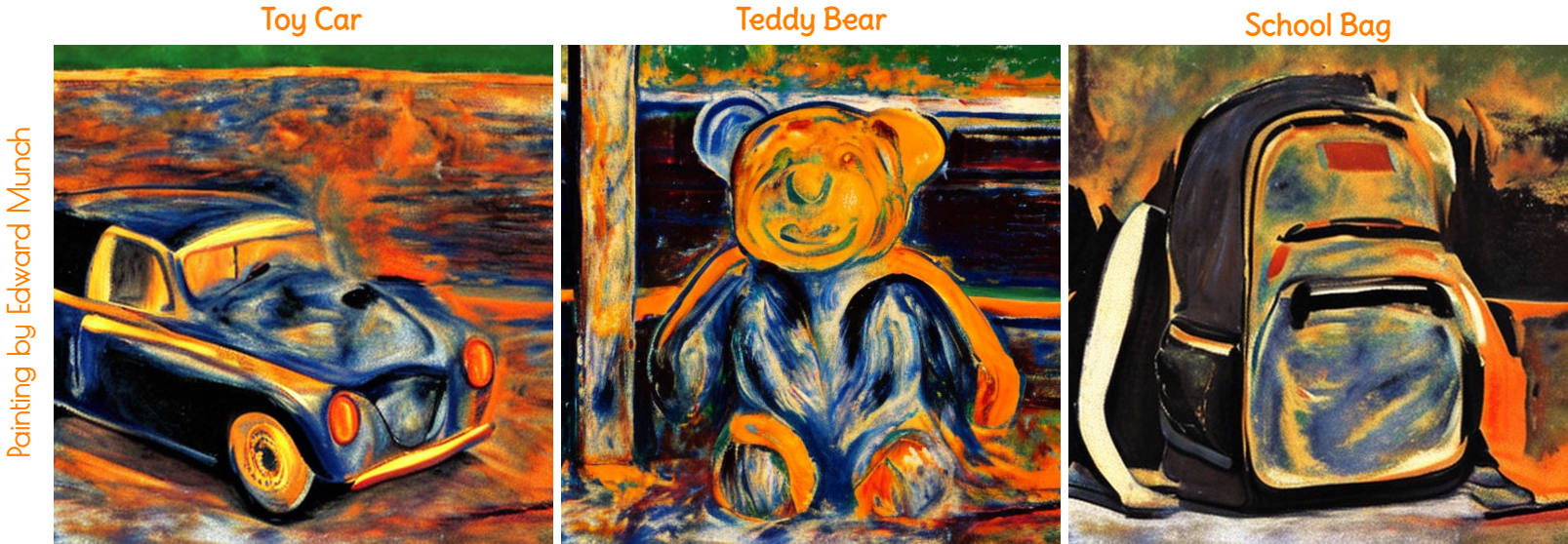

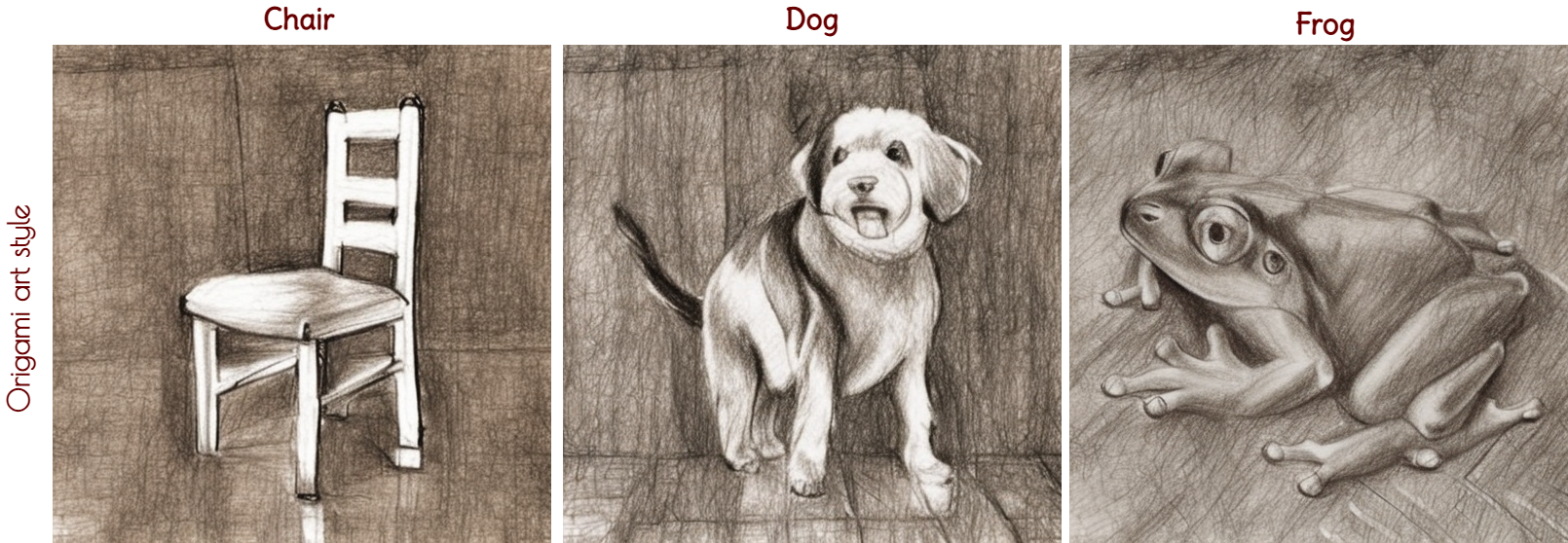

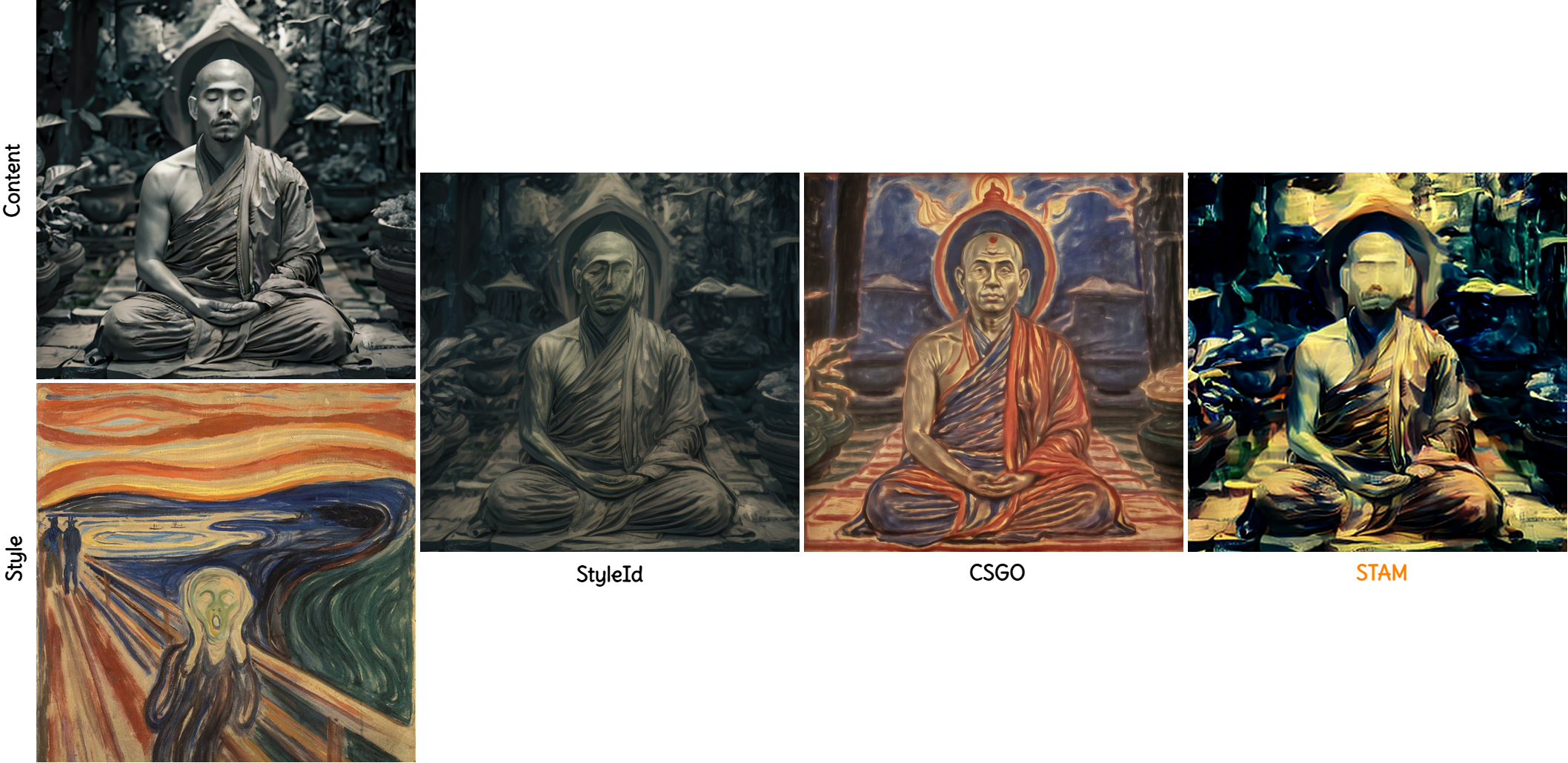

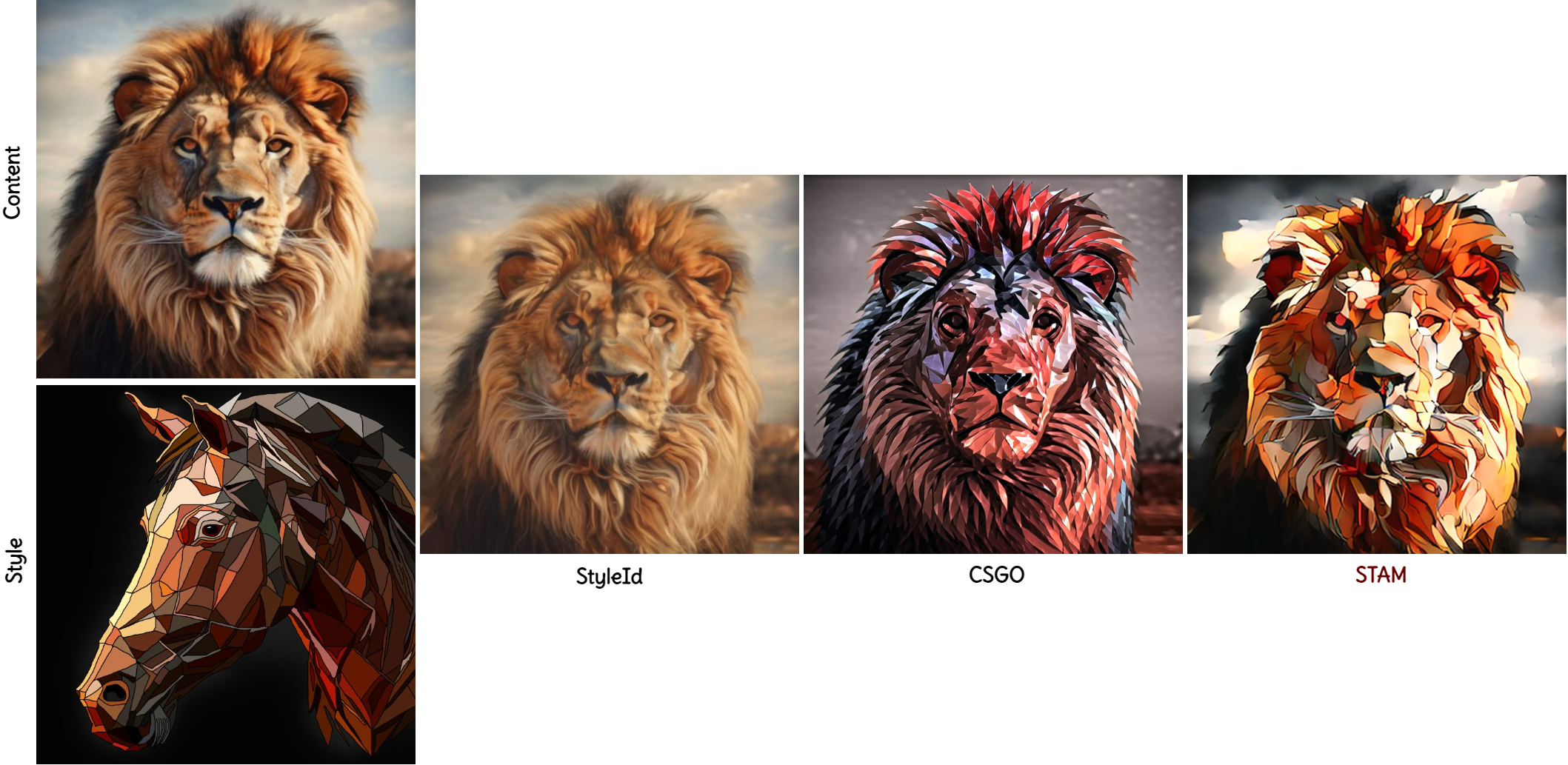

Diffusion models enable many zero-shot applications in addition to stunning image generation, and style transfer is a popular one among them. In contrast to classical style transfer methods, recent approaches harness diffusion models, where decoupling the style image from the content image yields the stylized image after the denoising steps. To further improve stylization performance, researchers integrate prompt guidance, adapters, or ControlNet into their style transfer pipelines. However, the style of an image is inherently subjective, and zero-shot diffusion stylization approaches commonly produce undesired results.

For instance, the tradeoff between style injection and content preservation is often difficult to balance, and the resulting stylized image can deviate far from the content image. We address both issues with two efficient contributions: a) preserving content by dividing and aggregating the self-attention, and b) maintaining the impact of style by modulating the attention components. Together, these contributions deliver aesthetically appealing yet content-preserving style transfer. Extensive experiments validate our approach qualitatively and quantitatively, and it outperforms concurrent methods on benchmark evaluations.
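To make the idea of splitting self-attention into content and style branches more concrete, here is a minimal NumPy sketch of a generic attention-injection scheme of the kind used in training-free diffusion style transfer. It is an illustrative assumption, not STAM's exact formulation: the function name `split_aggregate_attention`, the content/style blend weight `alpha`, and the logit scale `gamma` on the style branch are all hypothetical. The stylized query attends separately to content keys/values and style keys/values, and the two outputs are aggregated with a convex weight.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v, scale=1.0):
    # Scaled dot-product attention; `scale` multiplies the logits,
    # so scale > 1 sharpens the attention map and scale < 1 flattens it.
    d = q.shape[-1]
    logits = (q @ k.T) / np.sqrt(d) * scale
    return softmax(logits, axis=-1) @ v


def split_aggregate_attention(q, k_c, v_c, k_s, v_s, alpha=0.5, gamma=1.0):
    """Hypothetical divide-and-aggregate self-attention.

    q:        queries from the image being stylized, shape (n, d)
    k_c, v_c: keys/values from the content image, shape (m_c, d)
    k_s, v_s: keys/values from the style image, shape (m_s, d)
    alpha:    weight of the content branch (assumed to lie in [0, 1])
    gamma:    logit scale applied only to the style branch
    """
    out_content = attention(q, k_c, v_c)
    out_style = attention(q, k_s, v_s, scale=gamma)
    # Convex aggregation of the two branches.
    return alpha * out_content + (1.0 - alpha) * out_style
```

In a real pipeline, such a function would replace the self-attention computation inside selected U-Net layers during denoising. Setting `alpha=1.0` recovers plain attention over the content features, while lowering `alpha` or raising `gamma` pushes the output toward the style features.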

@article{Fahim2025STAM,
  author = {Fahim, Masud An Nur Islam and Saqib, Nazmus and Boutillier, Jani},
  title = {STAM: Zero-shot Style Transfer using Diffusion Model via Attention Modulation},
  journal = {CVPR},
  year = {2025},
}